Bert And Ernie's Great Adventures ABC iview

Here is the link to this code on Git. 3. Training the Model Using a Pre-trained BERT Model. Some checkpoints before proceeding further: all the .tsv files should be in a folder called "data" in the…
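The checkpoint above can be verified before training starts. The following is a minimal sketch under stated assumptions: `check_data_folder` is a hypothetical helper, the folder name "data" comes from the text, and the expected file names are not given there, so it only checks that the folder exists, holds at least one .tsv file, and that each file's first line contains a tab.

```python
from pathlib import Path


def check_data_folder(data_dir: str = "data") -> list[str]:
    """Hypothetical pre-training check; returns a list of problems (empty means OK)."""
    folder = Path(data_dir)
    if not folder.is_dir():
        return [f"missing folder: {data_dir}"]
    tsv_files = sorted(folder.glob("*.tsv"))
    if not tsv_files:
        return [f"no .tsv files in: {data_dir}"]
    problems = []
    for path in tsv_files:
        # Cheap sanity check: look for a tab in the first line only.
        first_line = path.read_text(encoding="utf-8").split("\n", 1)[0]
        if "\t" not in first_line:
            problems.append(f"not tab-separated: {path.name}")
    return problems
```

Usage: call `check_data_folder()` from the project root and stop if it returns anything, for example `problems = check_data_folder(); assert not problems, problems`.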

EXCLUSIVE! Groupe Bert: a haulier's tour de force Transportissimo

Rev. Robert Bryan, half of the influential Maine humor duo "Bert and I," died in Sherbrooke, Quebec, on Wednesday. He was 87. Bryan's comedy partner, Marshall Dodge, died in 1982. The first.

Home Bert&You

Below is a very limited list of fine-tuned versions of BERT:

- BERT-base-chinese: a version of BERT-base trained for NLP tasks in Chinese.
- BERT-base-NER: a version of BERT-base customized for named entity recognition.
- Symps_disease_bert_v3_c41: a symptom-to-disease classification model for a natural-language chatbot.

What Should You Expect With Google's Bert Update? Shane Carpenter Strategic Communication

BERT is a natural language processing model pre-trained on unlabeled text (unsupervised pre-training). After fine-tuning, BERT outperformed the previous state of the art on 11 of the most common NLP tasks, essentially becoming a rocket booster for natural language processing and understanding.

'Sesame Street's' Bert and Ernie are more than just friends, says writer

Provided to YouTube by Sesame Workshop Catalog. But I Like You · Bert & Ernie. Sesame Street: Bert and Ernie's Greatest Hits ℗ 2018 Sesame Workshop under exclusiv…

Are Bert and Ernie Gay? We Checked the Research. Pacific Standard

BERT&YOU goes electric: first miles for this Renault Trucks E-TECH rigid truck, which now runs on the roads of the south-east around the Phocaean capital (Marseille). Climate and Resilience Law: BERT&YOU is already prepared!

Bert and Ernie Are Puppets, Not Homosexuals Caffeinated Thoughts

Bert and I (Piss Boys). Originally performed by Marshall Dodge and Bob Bryan. Bert and I holds a really special…

GARONS: Bert & You has opened its new logistics platform

BERT is an acronym for Bidirectional Encoder Representations from Transformers. This means that, unlike techniques that analyze a sentence only left-to-right or right-to-left, BERT uses the Transformer encoder to read in both directions at once. Its goal is to build a language model.
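One way to see the difference between one-directional and bidirectional reading is through the attention mask. The sketch below is a simplified illustration, not BERT's actual code: a left-to-right model lets position i attend only to positions 0..i, while an encoder such as BERT lets every position attend to every other position (real implementations still mask padding tokens, which is ignored here). The function names and the example sentence are hypothetical.

```python
def causal_mask(n: int) -> list[list[int]]:
    """Left-to-right mask: position i may attend only to positions 0..i."""
    return [[1 if j <= i else 0 for j in range(n)] for i in range(n)]


def bidirectional_mask(n: int) -> list[list[int]]:
    """Encoder-style mask: every position may attend to every position."""
    return [[1] * n for _ in range(n)]


def visible_context(mask: list[list[int]], tokens: list[str], i: int) -> list[str]:
    """Tokens that position i is allowed to see under the given mask."""
    return [tok for tok, allowed in zip(tokens, mask[i]) if allowed]


tokens = ["the", "bank", "of", "the", "river"]
print(visible_context(causal_mask(5), tokens, 1))         # ['the', 'bank']
print(visible_context(bidirectional_mask(5), tokens, 1))  # all five tokens
```

Under the bidirectional mask, the representation of "bank" can use "river" to its right, which is the kind of context a strictly left-to-right model cannot see at that position.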

Hey Bert Do You Think We Could Try This at Home? Bert and Ernie Watching a Bodil Joensen Porno

BERT uses WordPiece embeddings as the input for tokens. Along with token embeddings, BERT uses positional embeddings and segment embeddings for each token. Positional embeddings carry information about a token's position in the sequence. Segment embeddings distinguish the two sentences when the model input is a sentence pair. Tokens of the first sentence will have a pre-defined…
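The three embeddings are summed per token. Here is a minimal sketch of that sum, assuming the `[CLS] A [SEP] B [SEP]` layout from the BERT paper, in which `[CLS]`, sentence A and the first `[SEP]` get segment id 0 and sentence B plus the final `[SEP]` get segment id 1. The random lookup tables are stand-ins for learned weights, the function names are hypothetical, and the LayerNorm and dropout that real BERT applies after the sum are omitted.

```python
import random


def build_segment_ids(tokens_a: list[str], tokens_b: list[str]):
    """Lay out a sentence pair as [CLS] A [SEP] B [SEP] with segment ids."""
    tokens = ["[CLS]"] + tokens_a + ["[SEP]"] + tokens_b + ["[SEP]"]
    segment_ids = [0] * (len(tokens_a) + 2) + [1] * (len(tokens_b) + 1)
    return tokens, segment_ids


def input_embeddings(tokens, segment_ids, dim=4, seed=0):
    """Sum token, position and segment embeddings from random toy tables."""
    rng = random.Random(seed)

    def rand_vec():
        return [rng.uniform(-1, 1) for _ in range(dim)]

    token_table = {tok: rand_vec() for tok in sorted(set(tokens))}
    position_table = [rand_vec() for _ in range(len(tokens))]
    segment_table = [rand_vec(), rand_vec()]  # segment 0 (A) and segment 1 (B)
    return [
        [t + p + s for t, p, s in zip(token_table[tok], position_table[i], segment_table[seg])]
        for i, (tok, seg) in enumerate(zip(tokens, segment_ids))
    ]


tokens, segment_ids = build_segment_ids(["my", "dog", "is", "cute"], ["he", "likes", "play", "##ing"])
vectors = input_embeddings(tokens, segment_ids)
```

Because the position vector differs at every index, two occurrences of the same WordPiece (for example both `[SEP]` tokens) end up with different input vectors.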

Ernie has a point Bertstrips Know Your Meme

The BERT Base architecture has the same model size as OpenAI's GPT, for comparison purposes. All of these Transformer layers are encoder-only blocks. If your understanding of the underlying Transformer architecture is hazy, I recommend reading about it here. Now that we know the overall architecture of BERT, let's see what kind of text processing steps are…
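To make "model size" concrete, the parameter count can be worked out from the layer shapes. This sketch assumes the commonly published bert-base-uncased configuration (12 layers, hidden size 768, feed-forward size 4 × 768 = 3072, a 30,522-token WordPiece vocabulary, 512 positions, 2 segment types); those numbers are not stated in the text above. The number of attention heads does not appear because the heads split the hidden size rather than adding weights. `bert_param_count` is a hypothetical helper.

```python
def bert_param_count(layers=12, hidden=768, vocab=30522, max_positions=512,
                     type_vocab=2, intermediate=None, pooler=True):
    """Count weights and biases of a BERT-style encoder (assumed configuration)."""
    if intermediate is None:
        intermediate = 4 * hidden  # feed-forward size 4H

    def dense(n_in, n_out):
        return n_in * n_out + n_out  # weight matrix plus bias

    layer_norm = 2 * hidden  # gain and bias
    embeddings = (vocab + max_positions + type_vocab) * hidden + layer_norm
    per_layer = (
        3 * dense(hidden, hidden)        # query, key and value projections
        + dense(hidden, hidden)          # attention output projection
        + layer_norm
        + dense(hidden, intermediate)    # feed-forward up-projection
        + dense(intermediate, hidden)    # feed-forward down-projection
        + layer_norm
    )
    total = embeddings + layers * per_layer
    if pooler:
        total += dense(hidden, hidden)   # pooler applied to the [CLS] position
    return total


print(f"{bert_param_count():,}")  # 109,482,240 (about 110M)
```

Roughly 85M of those parameters sit in the 12 encoder layers and about 24M in the embedding tables, most of that in the vocabulary embeddings.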

Bert&You takes on a cargo from the Drôme CFNEWS

Bidirectional Encoder Representations from Transformers (BERT) is a language model based on the transformer architecture, notable for its dramatic improvement over previous state-of-the-art models. It was introduced in October 2018 by researchers at Google.

Bert & Ernie Imgflip

BERT, short for Bidirectional Encoder Representations from Transformers, is a machine learning (ML) model for natural language processing. It was developed in 2018 by researchers at Google AI Language and serves as a Swiss Army knife solution to 11+ of the most common language tasks, such as sentiment analysis and named entity recognition.

Here’s to You, Dr. Bert AME Blog

Sing along to over 30 minutes of songs, including classics like "Dance Myself to Sleep" and "Doin' the Pigeon", in this compilation with Sesame Street's best budd…

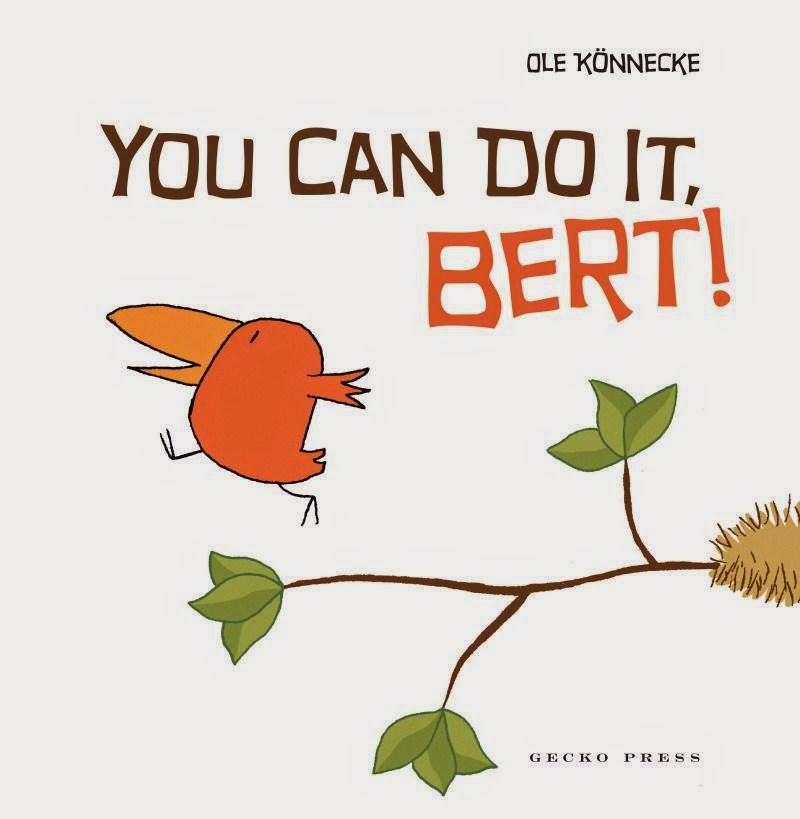

Kids' Book Review Review You Can Do It, Bert!

BERT doesn't use the decoder: as noted, BERT doesn't use the decoder part of the vanilla Transformer architecture. So the output of BERT is an embedding (one vector per input token), not text. This is important: because the output is an embedding, whatever you use BERT for, you'll need to do something with that embedding.
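For sentence classification, the BERT paper feeds the final hidden vector of the `[CLS]` token into a small classification layer. The sketch below shows only that last step, a linear layer followed by softmax, on a toy 3-dimensional vector standing in for a 768-dimensional BERT output. The weights and the function name are hypothetical; in practice the head is learned during fine-tuning.

```python
import math


def classify_cls_embedding(cls_vector, weights, bias):
    """Linear layer plus softmax over a [CLS] embedding; returns class probabilities."""
    logits = [sum(w * x for w, x in zip(row, cls_vector)) + b
              for row, b in zip(weights, bias)]
    peak = max(logits)
    exps = [math.exp(z - peak) for z in logits]  # subtract the max for numerical stability
    total = sum(exps)
    return [e / total for e in exps]


cls_vector = [0.2, -1.0, 0.5]                  # toy stand-in for a BERT [CLS] output
weights = [[1.0, 0.0, 0.0], [0.0, -1.0, 1.0]]  # hypothetical 2-class head
bias = [0.0, 0.0]
probs = classify_cls_embedding(cls_vector, weights, bias)
```

Other tasks swap the head: token-level tasks such as named entity recognition apply a classifier to every token's embedding instead of only `[CLS]`.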

The Importance of Being Ernie (and Bert) Macmillan

Bert Ross & Diana (Heimer) Miller, Jr., of Quincy, are celebrating their 40th wedding anniversary with a possible gathering at a later date. We ask that you bring no gifts.

BERT&YOU YouTube

Bert & I is the name given to numerous collections of humor stories set in the "Down East" culture of traditional Maine. These stories were made famous and mostly written by the humorist storytelling team of Marshall Dodge (1935-1982) and Bob Bryan (1931-2018) in the 1950s and the 1960s and in later years through retellings by Allen Wicken.